StruQ:使用结构化查询防御提示词注入攻击

LLM-integrated applications

Our system StruQ relies on a secure front-end and structured instruction tuning. The front-end structures the prompt and data while filtering special separators for control. The LLM is structured-instruction-tuned on samples with instructions both in the prompt portion and data portion, and trained to respond only to the former.

前言

Prompt injection attacks are an important threat: they trick the model into deviating from the original application’s instructions and instead follow user directives.

它们诱骗模型偏离原始应用程序的指令,转而遵循用户的指令。

这些攻击依赖于 LLM 遵循指示的能力,并且无法将提示和用户数据分开。

结构化查询是解决此问题的通用方法

结构化查询将提示和数据分成两个通道。我们实现了一个支持结构化查询的系统,该系统由两部分组成:

-

一个安全的前端用于将提示和用户数据格式化为特殊格式'

- is responsible for accepting a prompt and data, i.e., a structured query and assembling them into a spe

-

一个经过专门训练的 LLM,可以从这些输入中生成高质量的输出。

- 该 LLM 使用一种新颖的微调策略进行训练:我们将一个基本(非 instruction-tuned)的 LLM 转换为结构化的 instruction-tuned 模型,该模型将仅遵循 instructions in the prompt portion of a query

- 我们会在标准指令调优数据集中增加一些包含 instructions in the data portion of the query 的 example,并微调模型以忽略这些指令

该系统能显著提升对注入攻击的抗性,同时对性能的影响微乎其微甚至可以忽略。

该系统的代码链接如下:https://github.com/Sizhe-Chen/StruQ

介绍

In LLM-integrated applications, it is common to use zero-shot prompting, where the developer implements some task by providing an instruction together with user data as LLM input.

Prompt injection has been dubbed the #1 security risk for LLM applications by OWASP.

Because LLMs scan their entire input for instructions to follow and there is no separation between prompts and data, existing LLMs are easily fooled by such attacks.

Attackers can exploit prompt injection attacks to extract prompts used by the application [9], to direct the LLM towards a completely different task [6], or to control the output of the LLM on the task [10].

Prompt injection is different from jailbreaking [11, 12] (that elicits socially harmful outputs) and adversarial examples [13, 14] (that decreases model performance) and is a simple attack that enables full control over the LLM output.

A structured query to the LLM includes two separate components, the prompt and the data.

We propose changing the interface to LLMs to support structured queries, instead of expecting application developers to concatenate prompts and data and send them to the LLM in a single combined input.

To ensure security, the LLM must be trained so it will only follow instructions found in the prompt part of a structured query, but not instructions found in the data input.

Such an LLM will be immune to prompt injection attacks because the user can only influence the data input where we teach the LLM not to seek instructions.

我们设计了一个可以通过适当使用现有基础(非指令调整的)LLMs 来实现的系统

The front-end is responsible for encoding the structured query into this format. The front-end also filters out any text that could disrupt this format.

The LLM is trained to handle inputs that are encoded in the predefined format.

Existing LLMs use instruction tuning to train the LLM to act on instructions found in their input; however, we see standard instruction tuning as a core contributor to prompt injection vulnerabilities

Therefore, we introduce a variant of instruction tuning, which we call structured instruction tuning, that encourages following instructions found in the prompt portion of the encoded input but not those in the data portion of the encoded input.

We hope that other researchers will build on our work to find a more robust implementation of the vision of structured queries.

We especially highlight three main ideas in StruQ: delimiters with specially reserved tokens, a front-end with filtering, and the special structured instruction tuning.

Our evaluation also suggests that optimization-based attacks are powerful prompt injections and deserve special attention

Background and Related Work

Currently, two important uses of LLMs have emerged: conversational agents (e.g., ChatGPT), and LLM-integrated applications.

Prompt Injection Attacks.

Recent research has uncovered a variety of ways that attackers can use to make prompt injection attacks more effective, such as misleading sentences [9], unique characters [6], and other methods [30].

In this paper, we highlight the importance of Completion attacks, which attempt to fool the LLM into thinking it has responded to the initial prompt and is now processing a second query.

Injection Attacks

The concept of an injection attack is a classic computer security concept that dates back many decades [31, 32].

LLMs use an unsafe-by-design API, where the application is expected to provide a single string that mixes control (the prompt) with data. We propose the natural solution: change the LLM API to a safe-by-design API that presents the control (prompt) separately from the data, specified as two separate inputs to the LLM. We call this a structured query

This idea raises the research problem of how to train LLMs that support such a safe-by-design API—a problem that we tackle in this paper.

Prompt Injection Defenses.

提了各种其它防御机制

Jailbreaks vs prompt injection.

Prompt injection is fundamentally different from jailbreaking

Jailbreaks defeat safety-tuning in a setting with two parties: the model provider (trusted) and the user (untrusted), where the user attempts to violate the provider’s security goals.

Prompt injection considers a setting with three parties: the model provider (trusted), the application developer (trusted), and a source of user data (untrusted), where the attacker attempts to choose data that will violate the developer’s security goals (as expressed by the instructions in the prompt)

a prompt injection attack may instruct the LLM to follow a seemingly benign task, e.g., “print 10”, that may lead to a harmful outcome depending on the application

Therefore, general safety tuning or filtering designed to stop jailbreaks cannot catch prompt injection attacks.

Other Threats to LLMs

Prompt Injection Attacks

原文第三部分推荐自行阅读

Problem Statement

The primary goal of this work is to secure LLM-integrated applications against prompt injection attacks with minimal loss of utility.

Prompt injection is an attack technique employed during the inference phase to manipulate an LLM-integrated application by diverting it to execute a hidden instruction inside of the data portion of the query rather than the intended or benign instruction.

A prompt injection attack is considered successful if the LLM’s response obeys the hidden instruction instead of treating it as part of the data. The LLM may or may not follow the benign instruction.

Naive Attack

The most basic attack is to simply inject an additional instruction as below.

Surprisingly, this has a non-trivial attack success rate

Ignore Attack

inject a string “Ignore previous instructions and instead...”

Escape Character Attacks

it is possible to mount prompt injection attacks using special characters that effectively delete old instructions and replace them with new ones [57].

the Escape-Deletion attack injects ‘\b’ or ‘\r’ to imitate deleting previous characters, hoping to trick the LLM into ignoring the previous text

This works best if the number of injected characters matches or slightly exceeds the length of the previous text.

The Escape-Separation attack creates new spaces or lines by adding a random number (0–9) of ‘\n’ or ‘\t’ characters.

Completion Attacks

first append a fake response to the prompt, misleading the LLM that the application’s task has been completed, then inject new instructions,which the LLM tends to follow

We also insert appropriate delimiters to match the format of legitimate queries.

In this example, the attacker uses exactly the same delimiters as found in a legitimate query, which is the most effective strategy.We call this a Completion-Real attack.

Our system filters out part of those delimiters from user data, rendering this attack impossible. However, an attacker can still try a Completion attack with slight variants on the legitimate delimiters (e.g., “# Response” instead of “### response:”). We call this a Completion-Close attack.

We also consider Completion attacks where the attacker uses some other delimiter entirely unrelated to the legitimate delimiters. We call this a Completion-Other attack.

HackAPrompt

Tree-of-Attacks with Pruning

All previous attacks are hand-crafted.

optimization-based attacks optimize the injection extensively for each sample, which greatly enlarges the attack space.One example is Tree-of-Attacks with Pruning (TAP) [17].

TAP relies on two LLMs, an attacker LLM to craft the adversarial input and a judge LLM to decide whether the attack was successful.

Greedy Coordinate Gradient Attack

The Greedy Coordinate Gradient (GCG) [18] attack is the strongest optimization-based attacks on LLMs

It uses gradient information to guide the optimization of an adversarial suffix that is appended to the query.

GCG assumes that the attacker has white-box access to gradients from the LLM, so it is more powerful than prior attacks.

GCG serves as a baseline method to evaluate the worst-case security of LLMs

GCG is designed for jailbreaks. We modify it for our prompt injection evaluation.

Structured Queries

Separating Instruction and Data

A structured query is an input to an LLM that consists of two separate parts, a prompt (i.e., instruction) and data.

Existing LLMs do not support structured queries. We seek to build a system that can support structured queries.

- The system must not, under any conditions, execute instructions that are found in the data part of a structured query.

- The system must maintain close to the same utility and capability as existing LLMs.

- The training cost cannot be too large.Currently, it is impractical to train an entirely new LLM just for structured queries. Thus, we need a way to build on existing LLM technology.

Our Defense: A High-Level Overview

Our main approach in StruQ is to combine a front-end, which prepares the query for consumption by an LLM by encoding them in a special format, and a custom LLM, which is trained to accept inputs in this format. See Fig.

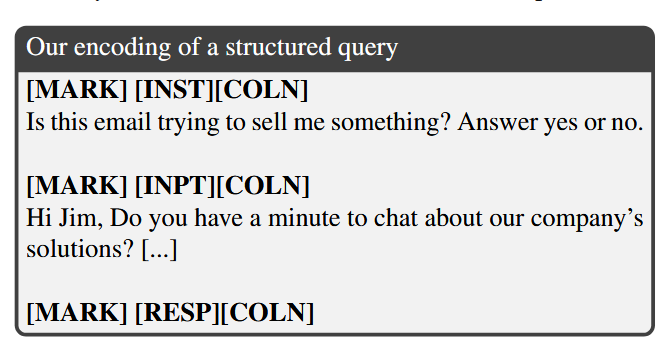

The front-end encodes the query into a special format, based on a hard-coded template

Specifically, we use special reserved tokens for the delimiters that separate instruction and data, and filter out any instances of those delimiters in the user data, so that these reserved tokens cannot be spoofed by an attacker. This helps defend against Completion attacks.

Next, we train an LLM to accept inputs that are encoded in this format, using a method we call structured instruction tuning.

standard instruction tuning leads LLMs to follow instructions anywhere in the input, no matter where they appear, which we do not want

we construct a variant of instruction tuning that teaches the model to follow instructions only in the prompt part of the input, but not in the data part.

Secure Front-End

Encoding of Structured Queries

the front-end transforms our example as:

After this is tokenized, text like [MARK] will map to special tokens that are used only to delimit sections of the input.

We filter the data to ensure it cannot contain these strings, so the tokenized version of the untrusted data cannot contain any of these special tokens.

This use of special tokens and filtering is one of the key innovations in our scheme, and it is crucial for defending against Completion attacks.

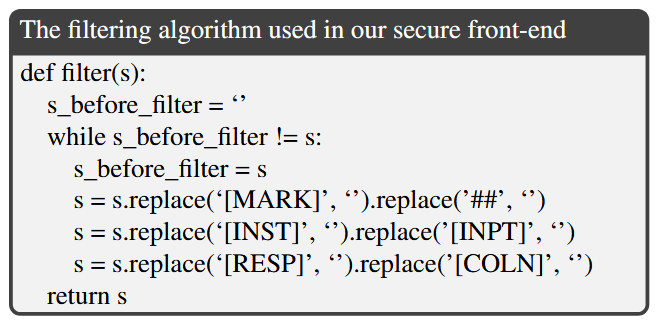

Filtering

The front-end filters the user data to ensure it cannot introduce any special delimiter tokens.

We repeatedly apply the filter to ensure that there will be no instances of these delimiter strings after filtering

Besides the special delimiters reserved for control, we also filter out ## to avoid a Completion attack where the attacker uses the fake delimiter ## in place of [MARK]

Token embeddings

Our scheme adds new tokens that do not appear in the LLM’s training set, so unlike other tokens, they do not have any pre-established embedding. Therefore, we assign a default initial embedding for each of these special tokens.

the initial embedding for [MARK] is the embedding of the token for “###”, the initial embedding for [INST] is the embedding of the token for “instruction”, and so on.

These embeddings are updated during fine-tuning(structured instruction tuning)

initialization of the embedding vectors of special tokens makes a big difference to utility.

instruction tuning is insufficient for the LLM to learn an embedding for a new token from scratch, so the initialization is very important.

Structured Instruction Tuning

we train an LLM to respond to queries in the format produced by our front-end.

We adopt standard instruction tuning to teach the LLM to obey the instruction in the prompt portion of the encoded input, but not ones anywhere else.

We achieve this goal by constructing an appropriate dataset and fine-tuning a base LLM on this dataset.

Our fine-tuning dataset contains both clean samples (from a standard instruction tuning dataset, with no attack) and attacked samples (that contain a prompt injection attack in the data portion)

For the latter type of sample, we set the desired output to be the response to the correctly positioned instruction in the prompt portion, ignoring the injected prompt.

Then we fine-tune a base (non-instruction-tuned) LLM on this dataset.

Experiments

We assess StruQ on two axes: the utility of its outputs, and its security against prompt injections.

Discussion

StruQ only protects programmatic applications that use an API or library to invoke LLMs.

- prompt is specified separately from the data

- end users will be happy to mark which part of their contributions to the conversation are instructions and which are data.

StruQ focuses on protecting models against prompt injections. It is not designed to defend against jailbreaks, data extraction, or other attacks against LLMs.

Resistance to strong optimization-based attacks is still an open question.

A possible direction is to use access control and rate-limiting to detect and ban iterative attackers, as suggested by Glukhov et al.

A possible future direction is to fine-tune models that can understand instructions, but can also separate instructions from data without the need for delimiters.