Final Report

[!ABSTRACT]

20241219 俞仲炜 3220104929

Introduction

[!QUOTE]

Describe the game project, the chosen algorithm, and its relevance to the course.

the game project

The game project link: [GITHUB] kying18/tic-tac-toe: Tic-tac-toe AI using minimax

The game project is a simple Tic-Tac-Toe game implementation, which includes game logic and different types of players.

The project structure is as follows:

game.py: Contains the main logic of the Tic-Tac-Toe game, including board initialization, printing, moves, and win/loss determination.player.py: Defines different types of players, including human players, random computer players, and smart computer players.

the chosen algorithm

The chosen algorithm: Min-Max Search, one of the search algorithms provided for the AI module, known as the "cornerstone of machine intelligence game(机器智能博弈的基石)", is actually a depth-first search algorithm variant. Is is a fundamental decision-making algorithm used in two-player, zero-sum games such as chess, checkers, or tic-tac-toe.

Algorithm Explanation

[!QUOTE]

Provide a detailed analysis of the algorithm, including its theory, implementation, and importance in game development.

Basic idea

The algorithm models the game as a tree of possible moves, where:

- Nodes represent game states.

- Levels alternate between the maximizing player, who seeks to maximize the score, and the minimizing player, who seeks to minimize the score.

At each node:

- The maximizing player chooses the action leading to the child node with the highest score.

- The minimizing player chooses the action leading to the child node with the lowest score.

implementation

Process:

- Starting from the current game state (root), the algorithm recursively evaluates all possible moves by traversing the tree to a predetermined depth or terminal states.

for possible_move in state.available_moves():

state.make_move(possible_move, player)

sim_score = self.minimax(state, other_player)

- At the leaf nodes, a heuristic evaluation function computes the desirability of the game state.

We evaluate the game state with the number of moves as the judging criterion.

# first we want to check if the previous move is a winner

if state.current_winner == other_player:

return {'position': None, 'score': 1 * (state.num_empty_squares() + 1) if other_player == max_player else -1 * (

state.num_empty_squares() + 1)}

elif not state.empty_squares():

return {'position': None, 'score': 0}

-

The algorithm backpropagates these values:

- Maximizing nodes select the maximum score from their children.

- Minimizing nodes select the minimum score.

if player == max_player: # X is max player

if sim_score['score'] > best['score']:

best = sim_score

else:

if sim_score['score'] < best['score']:

best = sim_score

- This process continues until the optimal value at the root is determined, identifying the best move.

Complete code:

def minimax(self, state, player):

max_player = self.letter # yourself, the computer

other_player = 'O' if player == 'X' else 'X'

# first we want to check if the previous move is a winner

if state.current_winner == other_player:

return {'position': None, 'score': 1 * (state.num_empty_squares() + 1) if other_player == max_player else -1 * (

state.num_empty_squares() + 1)}

elif not state.empty_squares():

return {'position': None, 'score': 0}

if player == max_player:

best = {'position': None, 'score': -math.inf} # each score should maximize

else:

best = {'position': None, 'score': math.inf} # each score should minimize

for possible_move in state.available_moves():

state.make_move(possible_move, player)

sim_score = self.minimax(state, other_player) # simulate a game after making that move

# undo move

state.board[possible_move] = ' '

state.current_winner = None

sim_score['position'] = possible_move # this represents the move optimal next move

if player == max_player: # X is max player

if sim_score['score'] > best['score']:

best = sim_score

else:

if sim_score['score'] < best['score']:

best = sim_score

return best

importance in game development.

The Minimax algorithm is used to make optimal moves by simulating all possible moves and their outcomes, ensuring that the computer player makes the best possible decision at each step. This is a fundamental concept in AI and game theory, making it highly relevant for understanding decision-making processes in competitive environments.

Many famous chess game AI are based on this algorithm, plus additional optimization algorithm to achieve, such as the famous chess AI Sunfish,gobang AI Gobang

Execution and Observations

[!QUOTE]

Summarize the process of setting up and running the code. Highlight challenges, insights, and outcomes from the demonstration.

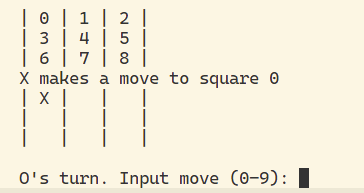

This program is renowned for its lightweight design and supports interaction via the terminal. It can be executed directly by running the game.py file using Python.

An example of the initial output after execution is shown below:

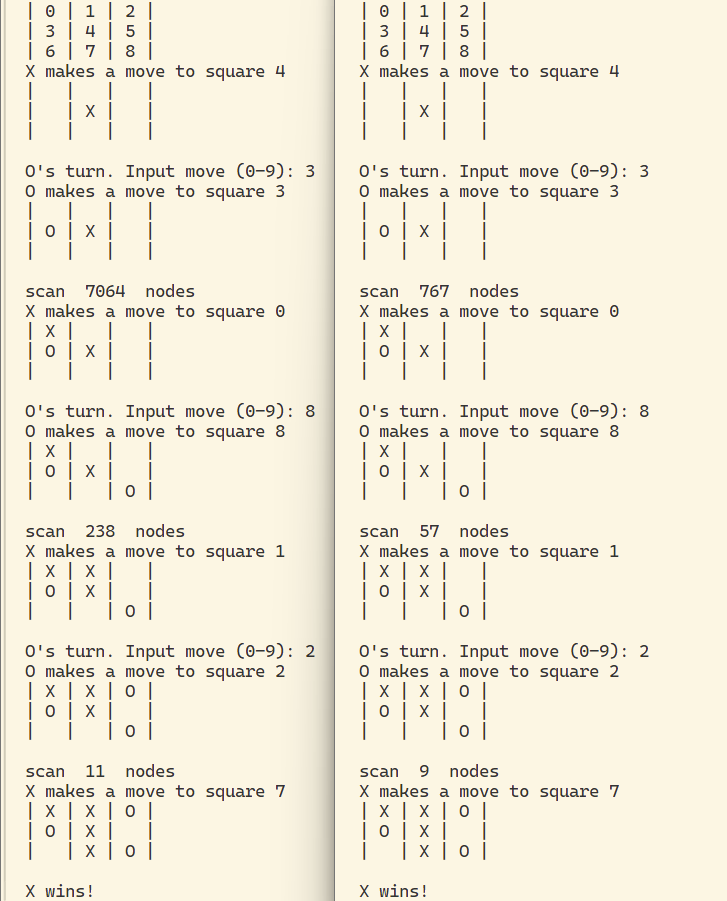

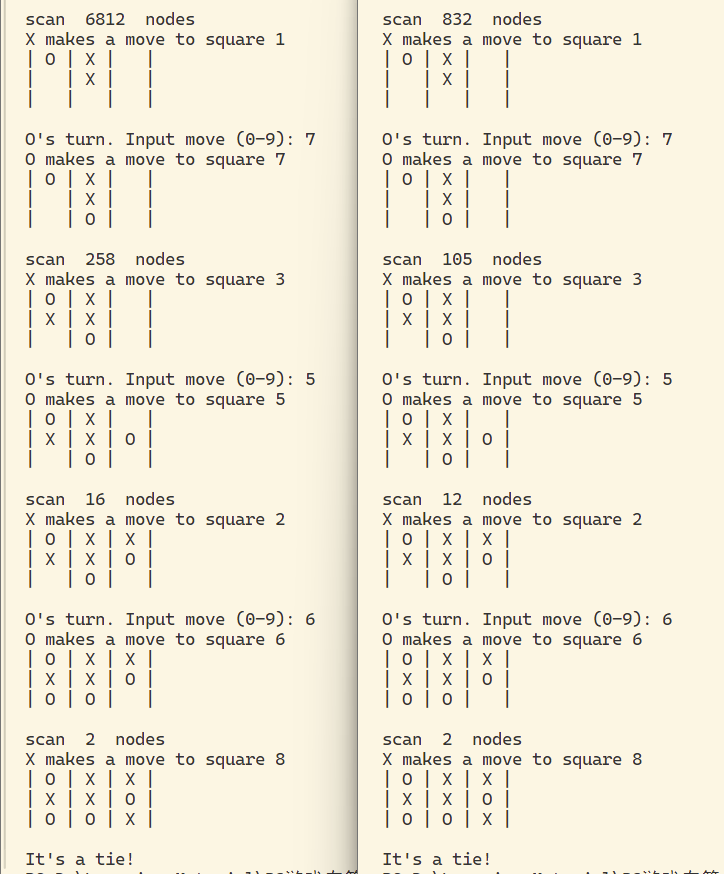

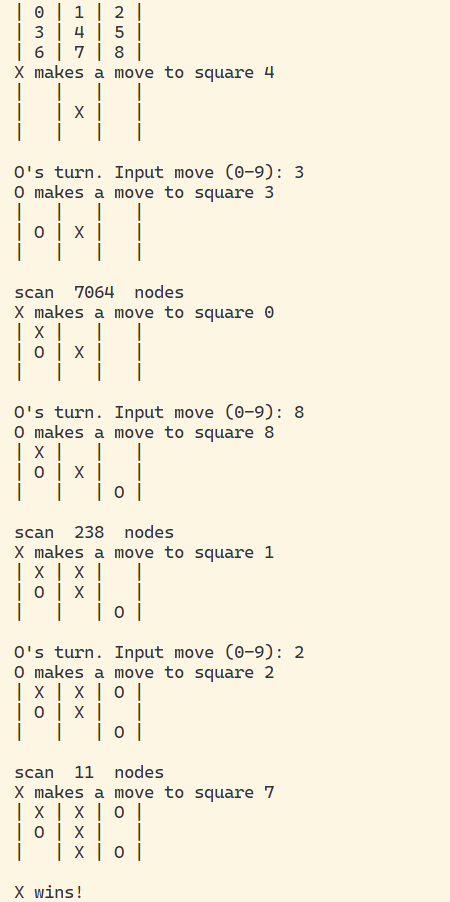

The following illustrates a complete game sequence:

This program is inherently straightforward; however, I have attempted to introduce some improvements.

Reflection

[!QUOTE]

Discuss what you learned from this assignment and any potential improvements or alternative approaches to the algorithm.

Evidently, the Min-Max algorithm in this project is the most fundamental one, which is a pure version without any optimization algorithms. It is suitable for games with relatively simple board configurations, such as Tic-Tac-Toe. However, once the game becomes complex (e.g., switching to chess or Go), the unoptimized brute-force traversal will be meaningless.

I attempted to add optimization algorithms to it and used the number of nodes traversed by the AI for the next move as the performance reference criterion. We added a global variable node_num to record the number of nodes traversed for each move. The corresponding modifications are as follows:

node_num = 0

class SmartComputerPlayer(Player):

# ...

def get_move(self, game):

global node_num

#...

node_num = 0

square = self.minimax(game, self.letter)['position']

print("scan ", node_num ," nodes")

#...

def minimax(self, state, player):

global node_num

node_num += 1

# ...

alpha-beta pruning

BFS traversal is sequential, so there is a context in determining the value of intermediate nodes. We can use this context to reduce unnecessary traversal of nodes.

The alpha-beta pruning algorithm is an optimization technique for the minimax algorithm used in decision-making and game theory, particularly in two-player, turn-based games like chess or tic-tac-toe

Its primary goal is to reduce the number of nodes evaluated in the game tree without affecting the final outcome of the search.

basic idea

The basic idea behind alpha-beta pruning is that for a Max node, we want its value to be as large as possible, and once it receives the value of a leaf node, we no longer explore those leaf nodes with smaller values. The same applies to a Min node.

The specific implementation involves each node keeping track of two additional variables, Alpha, which represents the maximum lower bound of all possible solutions, and Beta, which represents the minimum upper bound of all possible solutions. When a node has Alpha greater than Beta, it means that no positive solution exists below that node.

Alpha-beta pruning ensures the same result as the standard minimax algorithm but with significantly improved efficiency.

The corresponding code changes are as follows:

# ...

def get_move(self, game):

global node_num

if len(game.available_moves()) == 9: # the first move

square = random.choice(game.available_moves())

else:

square = self.minimax(game, self.letter, -math.inf, math.inf)['position']

# ...

def minimax_ab(self, state, player, alpha, beta):

# ...

for possible_move in state.available_moves():

# ...

sim_score = self.minimax(state, other_player, alpha, beta)

if player == max_player: # X is max player

if sim_score['score'] > best['score']:

best = sim_score

alpha = max(alpha, sim_score['score'])

else:

if sim_score['score'] < best['score']:

best = sim_score

beta = min(beta, sim_score['score'])

if beta <= alpha:

break

return best

Performance Comparison

Under the same game scenario, the number of nodes traversed for the same move is reduced by an average of 86% ~ 89%, while the optimal move computed at each step remains consistent.

NOMMAL

Reduced by an average of 88.61%

LEFT is the origin version, and RIGHT is the alpha-beta pruning version

TIE

Reduced by an average of 86.58%

LEFT is the origin version, and RIGHT is the alpha-beta pruning version

Complexity analysis

In the best case, it reduces the time complexity from \(O(b^d)\) to \(O(b^{d/2})\), where \(b\) is the branching factor and \(d\) is the depth of the game tree.

Complete code:

def minimax_ab(self, state, player, alpha, beta):

global node_num

node_num += 1

max_player = self.letter # yourself, the computer

other_player = 'O' if player == 'X' else 'X'

# first we want to check if the previous move is a winner

if state.current_winner == other_player:

return {'position': None, 'score': 1 * (state.num_empty_squares() + 1) if other_player == max_player else -1 * (

state.num_empty_squares() + 1)}

elif not state.empty_squares():

return {'position': None, 'score': 0}

if player == max_player:

best = {'position': None, 'score': -math.inf} # each score should maximize

else:

best = {'position': None, 'score': math.inf} # each score should minimize

for possible_move in state.available_moves():

state.make_move(possible_move, player)

sim_score = self.minimax_ab(state, other_player, alpha, beta) # simulate a game after making that move

# undo move

state.board[possible_move] = ' '

state.current_winner = None

sim_score['position'] = possible_move # this represents the move optimal next move

if player == max_player: # X is max player

if sim_score['score'] > best['score']:

best = sim_score

alpha = max(alpha, sim_score['score'])

else:

if sim_score['score'] < best['score']:

best = sim_score

beta = min(beta, sim_score['score'])

if beta <= alpha:

break

return best